Iterating the Smart Way (Not the Hype Way): Building Our AI Activity Selector

What started as a hackathon experiment became a production-ready feature that helps customers get the right coverage without the guesswork.

When someone buys insurance at Insify, one of the most important steps is selecting what their business actually does. It sounds simple, but here's what was happening: users would complete a purchase thinking they were covered, only to discover during a claim that they'd selected the wrong activity and weren't covered at all.

The root of the problem? Our process relied on the activity codes companies have registered in the Dutch business registry (KVK). But those codes are often outdated: startups pivot, freelancers expand their scope, and nobody remembers to update KVK. Yet coverage depends on the activity that’s selected. That’s a risky mismatch.

We thought: what if we could fix this with AI?

The Problem with KVK (and Static Dropdowns)

Here’s how the flow used to work:

1. A customer types in their business name.
2. We fetch their KVK code.
3. We show activities linked to that code.
4. Most people pick from that list, even if it’s no longer accurate.

During user interviews, we learned that most users never typed anything into the search field. They just picked what we showed them, and that often meant they weren’t properly covered.

We wanted to give users a safer, smarter alternative without adding complexity to the flow.

Enter the AI Activity Selector

The idea was simple: let users describe their business in their own words, then use AI to recommend matching activities from our own vetted list, so they end up with proper coverage.

We didn't want to over-engineer this with massive LLMs. Instead, we experimented with lightweight embedding models from Hugging Face, which let us compare a customer's description with our internal activity catalogue using vector similarity.
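To make "vector similarity" concrete: an embedding model turns both the customer's description and each catalogue activity into a vector, and the activities whose vectors point in the most similar direction get suggested. The sketch below assumes cosine similarity as the metric and uses tiny hand-made vectors in place of real model output; the catalogue entries and the `rank_activities` helper are hypothetical, not Insify's code.

```python
import math

# Hypothetical, hand-made 3-dimensional vectors standing in for real embeddings.
# A real Hugging Face embedding model would produce hundreds of dimensions.
CATALOGUE = {
    "Web development": [0.9, 0.1, 0.0],
    "IT consultancy": [0.7, 0.3, 0.1],
    "Bicycle repair": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def rank_activities(query_vec, catalogue, top_k=2):
    """Return the top_k (activity, similarity) pairs, most similar first."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in catalogue.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Made-up embedding for a description like "I build websites for small shops".
query = [0.85, 0.2, 0.05]
for name, score in rank_activities(query, CATALOGUE):
    print(f"{name}: {score:.3f}")
```

In a setup like this, the catalogue vectors would typically be computed once and cached, so only the customer's description needs to be embedded when they type it.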

We tested more than 10 models, built a minimal prototype, and demoed it at one of our internal hackathons. The response? Let’s make it real.

Cleaning the Mess Before Training the Model

Like all good AI projects, the real work started with data.

We had an activity list. We had user input logs. We had exclusions and risk combinations from different insurance carriers. But none of it was clean or structured for training.

So we rolled up our sleeves.

During a two-day hackathon, 10+ people (from insurance experts to Dutch speakers across the team) helped clean and restructure our activity data. We used LLMs to augment the dataset, ran manual validations on every match, and built a small supervised dataset to benchmark performance.
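A small labelled dataset like this lets you compare candidate models on equal footing. One common metric for a suggestion list is top-k accuracy: how often the validated activity appears among the first k suggestions. The sketch assumes that metric (the post doesn't say which one was used); the benchmark rows and the `fake_model` predictor are made up for illustration.

```python
# Hypothetical benchmark rows: (customer description, activity a validator marked as correct).
BENCHMARK = [
    ("I build websites for small shops", "Web development"),
    ("I fix and service bicycles", "Bicycle repair"),
    ("I advise companies on their IT systems", "IT consultancy"),
]

# Made-up ranked outputs standing in for a real model's suggestions.
FAKE_PREDICTIONS = {
    "I build websites for small shops": ["Web development", "IT consultancy", "Graphic design"],
    "I fix and service bicycles": ["Bicycle sales", "Bicycle repair", "Car repair"],
    "I advise companies on their IT systems": ["Management consultancy", "Web development", "Graphic design"],
}


def fake_model(description: str) -> list[str]:
    """Return a ranked list of activity suggestions for a description."""
    return FAKE_PREDICTIONS.get(description, [])


def top_k_accuracy(examples, predict, k=3):
    """Share of examples whose validated activity is among the top k suggestions."""
    if not examples:
        return 0.0
    hits = sum(1 for text, label in examples if label in predict(text)[:k])
    return hits / len(examples)


print(f"top-1: {top_k_accuracy(BENCHMARK, fake_model, k=1):.2f}")
print(f"top-3: {top_k_accuracy(BENCHMARK, fake_model, k=3):.2f}")
```

Running every candidate model through the same function yields directly comparable numbers, which is the point of building the benchmark before plugging in a model.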

Only after that did we plug in the model.

The First Version: AI in the Funnel

The first iteration of the AI selector was shipped in May as an optional text box where users could describe their activity. The AI then returned matches from our catalogue.

We immediately saw improvements: users discovered relevant activities they might not have searched for. But we also learned a few hard lessons:

On mobile, people preferred dropdowns over typing.

More suggestions sometimes led users to select too many activities, and some of the resulting risk combinations weren't supported, which left their selection unsupported.

The AI suggested activities across different carriers, which complicated coverage eligibility.
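The last two lessons come down to checking eligibility for the selection as a whole rather than for each activity on its own. As a hypothetical sketch (the carrier names, coverage sets and excluded pairs are invented), a selection is supported only if at least one carrier covers every selected activity and none of the selected pairs is on that carrier's exclusion list:

```python
from itertools import combinations

# Hypothetical carrier rules: activities each carrier covers, and pairs of
# activities it will not insure together (risk combinations).
CARRIERS = {
    "carrier_a": {
        "covers": {"Web development", "IT consultancy", "Graphic design"},
        "excluded_pairs": {frozenset({"Web development", "Graphic design"})},
    },
    "carrier_b": {
        "covers": {"Bicycle repair", "Bicycle sales"},
        "excluded_pairs": set(),
    },
}


def eligible_carriers(selected: set[str], carriers: dict) -> list[str]:
    """Carriers that cover every selected activity and exclude none of its pairs."""
    result = []
    for name, rules in carriers.items():
        if not selected <= rules["covers"]:
            continue  # at least one activity is outside this carrier's appetite
        pairs = {frozenset(p) for p in combinations(sorted(selected), 2)}
        if pairs & rules["excluded_pairs"]:
            continue  # the selection contains a forbidden risk combination
        result.append(name)
    return result


print(eligible_carriers({"Web development", "IT consultancy"}, CARRIERS))
print(eligible_carriers({"Web development", "Bicycle repair"}, CARRIERS))
```

Because every extra activity can only remove carriers from the result, showing more suggestions raises the chance that a selection ends up with no eligible carrier at all.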

Iterating the Smart Way (Not the Hype Way)

That’s when Augustas stepped in. He rebuilt the logic, not with more LLMs, but with a simple logistic regression model tuned specifically to our needs.

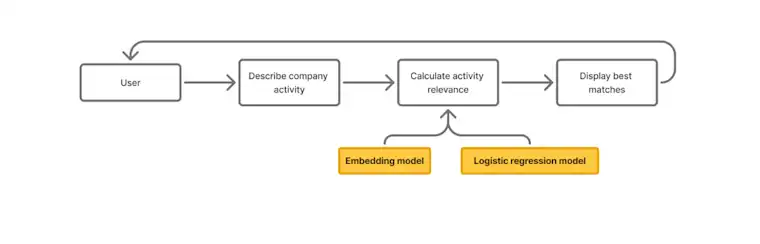

The AI Activity Selector flow: Users describe their business, and we use both embedding and logistic regression models to display the most relevant activities from our catalogue.
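The caption doesn't spell out how the two models fit together. One plausible arrangement, shown here purely as an assumption, is to feed the embedding similarity into a logistic regression as one of its input features, so the model outputs the probability that an activity is correct for a description. The second feature (whether the activity matches the company's KVK code), the training rows and the hyperparameters are all hypothetical; a production system would more likely use a library such as scikit-learn than hand-written gradient descent.

```python
import math

# Hypothetical training rows: ([embedding similarity, matches KVK code (1.0/0.0)], label),
# where label 1 means a validator accepted the activity for that description.
TRAIN = [
    ([0.92, 1.0], 1),
    ([0.88, 0.0], 1),
    ([0.75, 1.0], 1),
    ([0.40, 1.0], 0),
    ([0.35, 0.0], 0),
    ([0.20, 0.0], 0),
]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(x, weights, bias):
    """Probability that the (description, activity) pair with features x is a match."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(rows, lr=0.5, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log loss."""
    n_features = len(rows[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in rows:
            error = predict_proba(x, weights, bias) - y  # d(log loss)/d(logit)
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


weights, bias = train_logistic_regression(TRAIN)
print(f"strong match: {predict_proba([0.95, 1.0], weights, bias):.2f}")
print(f"weak match:   {predict_proba([0.10, 0.0], weights, bias):.2f}")
```

A model this small is cheap to retrain and easy to inspect: each weight shows how much a feature pushes a suggestion up or down.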

We focused on UX: limiting the number of suggestions, showing clearer matches, and improving how we combine activities for eligibility. We also ran a controlled A/B test to measure the impact.
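Limiting the number of suggestions can be as simple as a cap plus a confidence threshold on the model's scores. The sketch below assumes the scores are probabilities (for instance from a logistic regression); `select_suggestions`, the cap of 3 and the threshold of 0.5 are illustrative choices, not the values Insify uses.

```python
def select_suggestions(scored, max_suggestions=3, min_probability=0.5):
    """Keep the most likely activities, filtered by confidence and capped in number.

    scored: list of (activity name, probability) pairs in any order.
    """
    confident = [(name, p) for name, p in scored if p >= min_probability]
    confident.sort(key=lambda pair: pair[1], reverse=True)
    return confident[:max_suggestions]


# Hypothetical model scores for one customer description.
scores = [
    ("Web development", 0.91),
    ("Graphic design", 0.42),
    ("IT consultancy", 0.73),
    ("Software development", 0.64),
    ("Online marketing", 0.55),
]
print(select_suggestions(scores))
```

Here "Online marketing" clears the threshold but is dropped by the cap, while "Graphic design" is dropped by the threshold; both knobs trade a little recall for a shorter list that users are less likely to over-select from.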

Results?

Fewer users dropped out at the activity step.

40% reduction in “unsupported” cases after activity selection.

And most importantly, customers get the right coverage upfront — no rejection, no back-and-forth.
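A reduction like this is usually quoted as a relative change: (control rate - treatment rate) / control rate. The post doesn't publish the underlying counts, so the numbers below are invented purely to show the arithmetic and one standard significance check, a two-proportion z-test; we don't know which test the team actually used.

```python
import math


def relative_reduction(control_rate: float, treatment_rate: float) -> float:
    """Relative drop from control to treatment, e.g. 0.25 means 25% fewer."""
    return (control_rate - treatment_rate) / control_rate


def two_proportion_z_test(x_control, n_control, x_treatment, n_treatment):
    """Two-sided z-test for a difference between two proportions (pooled variance)."""
    p_control = x_control / n_control
    p_treatment = x_treatment / n_treatment
    pooled = (x_control + x_treatment) / (n_control + n_treatment)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    z = (p_control - p_treatment) / se
    # Standard normal CDF via the error function: Phi(z) = 0.5 * (1 + erf(z / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Invented counts: unsupported cases out of users who reached the activity step.
control_unsupported, control_users = 200, 1000
treatment_unsupported, treatment_users = 150, 1000

reduction = relative_reduction(control_unsupported / control_users,
                               treatment_unsupported / treatment_users)
z, p = two_proportion_z_test(control_unsupported, control_users,
                             treatment_unsupported, treatment_users)
print(f"relative reduction: {reduction:.0%}, z = {z:.2f}, p = {p:.4f}")
```

Note the difference between relative and absolute change: in this made-up example the unsupported rate falls by 5 percentage points, which is a 25% relative reduction.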

Why It Matters

Before this project, customers would complete a purchase thinking they were fully protected, only to discover during a claim that they weren't. That’s exactly the kind of problem Insify exists to solve.

This tool helps prevent that. It gives users a safer way to describe their business and helps us guide them to the right product, without needing a phone call or a legal background.

It’s not magic. It’s just solid engineering, thoughtful UX, and a team that cares.

What Made It Fun

This wasn’t a project we were told to do. It was a real-world problem we saw and decided to solve. Everyone involved, from data engineers to designers, pitched in during the hackathon and beyond.

It felt like a “real” startup project: we owned it from end to end, tested fast, failed a few times, and made it better.

We weren't handed a spec. We were trusted to figure it out. We didn’t default to hype. We didn’t overfit to buzzwords. We just built something useful, and that’s what made it satisfying.

What’s Next?

We're already exploring how to use AI to guide users through other parts of the insurance journey. Can we help answer questions in real-time when they're comparing policies? Make complex insurance terms clearer as they review coverage? Help them understand what they actually need before they hit "buy"? This project didn't just solve one problem; it showed us what's possible when you iterate smart, not just fast. And we’re proud of it.

Want to build AI that makes a difference? We’re hiring!
